After testing various methods around the lab and exploring multiple ways to map a NAS or file share, we found that y-cruncher wants direct I/O control of the disks it works with: not just the swap disks, but also the output directory. Unfortunately, even Kevin does not have a 183TB flash drive on his janitor-sized key ring of flash drives (yet).

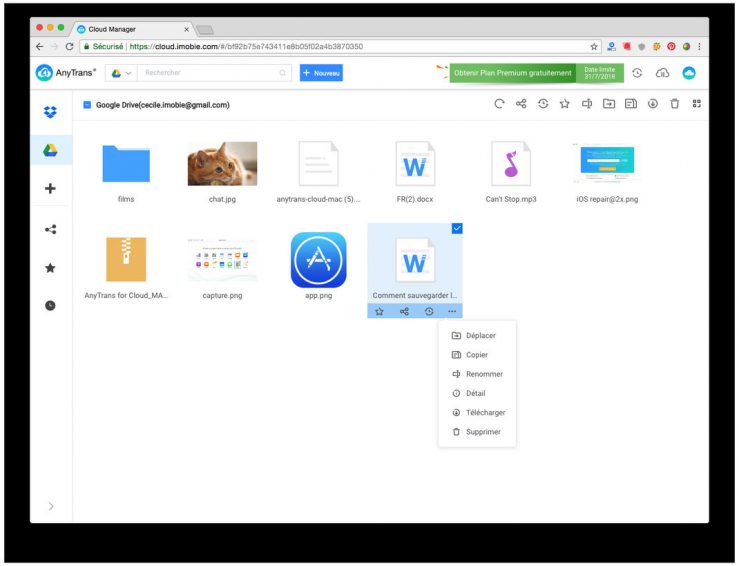

At StorageReview, we have access to some of the latest and greatest hardware in the industry, including 4th Gen AMD EPYC processors, Solidigm P5316 SSDs, and obscene amounts of lithium batteries. Like a match made in heaven, we built a high-performance server with just under 600TB of QLC flash and a unique high-availability power solution. Here are the specifications of our compute system:

While the total hardware might seem extreme, buying it outright still costs a fraction of what running the same workload in the cloud for six months would.

One of the first questions that came up while we were designing our rig for this test was, "How are we going to present a contiguous volume large enough to store a text file with 100 trillion digits of Pi?" (This is definitely a direct quote that we totally said.) The math is quite simple: one Pi digit equals one byte, so 100 trillion decimal digits meant we needed 100TB for the decimal output, plus an additional 83TB for the 83 trillion hexadecimal digits that would also be calculated. Thankfully, this is StorageReview, and if there is one thing we know how to do, it's store lots of data under excessive amounts of stress.
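The capacity math above can be checked with a few lines of Python. This is a minimal sketch, not our actual planning tool: `pi_output_bytes` is a hypothetical helper, and it assumes one byte per digit (as stated above), hexadecimal output carrying the same precision as the decimal output, and decimal terabytes (10^12 bytes). Matching the precision of N decimal digits in base 16 takes N x log16(10), or about 0.83N, hex digits, which is where the 83 trillion figure comes from.

```python
import math

TB = 10**12  # decimal terabyte (assumption: vendor-style capacity units)

def pi_output_bytes(decimal_digits: int) -> tuple[int, int, int]:
    """Hypothetical helper: estimate output size for a decimal + hex Pi run.

    Assumes one byte per digit and that the hex output has the same
    precision as the decimal output.
    """
    # Equivalent precision in base 16 needs log16(10) ~= 0.8305 hex digits per decimal digit.
    hex_digits = math.ceil(decimal_digits * math.log(10, 16))
    return decimal_digits, hex_digits, decimal_digits + hex_digits

dec, hx, total = pi_output_bytes(100 * 10**12)
print(f"decimal: {dec / TB:.0f} TB, hex: {hx / TB:.0f} TB, total: {total / TB:.0f} TB")
# prints: decimal: 100 TB, hex: 83 TB, total: 183 TB
```

The 183TB total is the same figure that rules out a single flash drive on Kevin's key ring. Note that this counts only the final digit files, not the far larger swap space the computation itself needs.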

We were impressed by the achievement of Emma and the Google Cloud team, but we also wondered whether we could do it faster and at a lower total cost. That run would also have come with a massive cloud compute and storage invoice. Combined with the mounting momentum for organizations to bring specific workloads back on-prem, that gave us an interesting idea…

Pi represents the ratio of a circle's circumference to its diameter, and its decimal expansion is infinite, never repeating or ending. Calculating Pi to ever more digits isn't just a thrilling quest for mathematicians; it's also a way to put computing power and storage capacity through the ultimate endurance test. Last year, Google Cloud Developer Advocate Emma Haruka Iwao announced that she and her team had calculated Pi to 100 trillion digits, breaking her previous record of 31.4 trillion digits from 2019. They used a program called y-cruncher running on Google Cloud's Compute Engine; the run took about 158 days to complete and processed around 82 petabytes of data. Up to now, Google Cloud has held the world record for the largest Pi solve at 100 trillion digits. As of today, StorageReview has matched that number, and done so in a fraction of the time.
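To give a feel for the arithmetic behind a run like this, here is a small in-memory sketch of the Chudnovsky series evaluated with binary splitting, the formula y-cruncher is generally documented as using for Pi. It is only an illustration under our own assumptions: y-cruncher's real implementation is vastly more sophisticated (it streams enormous intermediate numbers through swap disks, which is why storage matters so much), and `_split`, `pi_digits`, and the `guard` parameter are hypothetical names chosen for this example. Each series term contributes roughly 14 decimal digits.

```python
import math

# Chudnovsky constants (sketch only; not y-cruncher's code)
C = 640320
C3_OVER_24 = C**3 // 24
# Each term of the series adds about log10(C^3 / 1728) ~= 14.18 decimal digits.
DIGITS_PER_TERM = math.log10(C3_OVER_24 / 72)

def _split(a: int, b: int) -> tuple[int, int, int]:
    """Hypothetical helper: binary splitting over terms [a, b), returning (P, Q, T)."""
    if b - a == 1:
        if a == 0:
            p = q = 1
        else:
            p = (6 * a - 5) * (2 * a - 1) * (6 * a - 1)
            q = a * a * a * C3_OVER_24
        t = p * (13591409 + 545140134 * a)
        if a & 1:  # the series alternates in sign
            t = -t
        return p, q, t
    m = (a + b) // 2
    p1, q1, t1 = _split(a, m)
    p2, q2, t2 = _split(m, b)
    # Merge the two halves: P and Q multiply, T combines as Q2*T1 + P1*T2.
    return p1 * p2, q1 * q2, q2 * t1 + p1 * t2

def pi_digits(digits: int, guard: int = 10) -> int:
    """Hypothetical helper: return floor(pi * 10**digits) as an integer."""
    precision = digits + guard  # extra guard digits absorb truncation error
    terms = int(precision / DIGITS_PER_TERM) + 1
    _, q, t = _split(0, terms)
    one = 10**precision
    sqrt_10005 = math.isqrt(10005 * one * one)  # sqrt(10005) in fixed point
    # pi = 426880 * sqrt(10005) * Q / T
    pi_fixed = (q * 426880 * sqrt_10005) // t
    return pi_fixed // 10**guard

print(pi_digits(30))  # 3141592653589793238462643383279
```

Binary splitting keeps the work in large-integer multiplications rather than repeated divisions, which is why record attempts are ultimately bound by multiplication speed, memory, and, at 100 trillion digits, disk bandwidth.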
